This summer was filled with plenty of (dare I say it) failures, but enough successes to illustrate the evolution of my work in the studio. And while I had hoped to have something concrete to show for the labors of my summer here, much of what I am leaving this fellowship with seems to be base skills that I would otherwise never have had the time to learn.

In my first blog post I wrote about the frustrations of figuring out how to make systems talk to each other. A lot of my early work in the studio involved troubleshooting different programs and finding ways around various roadblocks: programs that were outdated, for example, and no longer interacted with the other systems needed for motion capture, and the question of how to explore motion capture without purchasing thousands of dollars' worth of motion capture accessories.

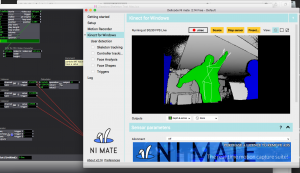

After several weeks of exploring different systems and programs, I found the right combination that allowed me to begin my work. Using a program called Delicode NI mate, I was finally able to capture skeletal data and send the information to my node-programming software, Isadora:

NI mate allowed the Xbox Kinect to capture data from skeletal data points and send it to Isadora, where the data could be manipulated to trigger or inform given events within the program. As the screenshot illustrates, there are some inaccuracies in the way the Kinect picks up skeletal positioning in space. It tends to get confused when body parts move too quickly and when body parts are not well-defined. This created problems when I started to explore movement as it pertained to the manipulation of digital particles on a screen; sometimes the Kinect would start to glitch, as in the picture above, which would cause the digital imagery on the screen to act up as well. Below you can see an example of this in the particle generator I created in Isadora. I chose blooming flowers (made in After Effects) to be the “particles” that responded to data that influenced their x, y, and z coordinates as well as the particles’ velocity, transparency, etc. The first example shows the particles responding to movement data in an uninterrupted pattern:

Once the skeletal tracking program gets a bit confused, the manipulated data within Isadora can look something like this:

Figuring out the solution to this problem was challenging, and truth be told I haven’t quite discovered how to eliminate the error in skeletal data tracking with the Kinect yet. I am continuing to fine-tune the way this particle generator interacts with skeletal data sent from the Kinect. My main goal is for the particles to react smoothly to the skeletal data, moving realistically according to the assigned body parts. Perhaps this goal isn’t totally attainable with my current setup; maybe I need an upgrade in the sophistication of my systems. That’s okay. Thanks to the Digital Scholarship and Publishing Studio, I now know how to troubleshoot and problem-solve within these programs, and can work on bringing what I learned this summer to life.
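
For anyone curious what "reacting smoothly" might look like outside of Isadora's node-based patching, here is a minimal sketch in Python of one common way to tame jittery tracking data: blend each new joint position with the previous one, and hold the last good position when a single frame jumps implausibly far. This is not what my Isadora patch does, and it is not part of NI mate or Isadora. The `JointSmoother` class and its `alpha`, `max_jump`, and `max_rejects` parameters are hypothetical names for illustration, and the sketch assumes each joint arrives as a plain (x, y, z) tuple in whatever units the tracker reports.

```python
from typing import Optional, Tuple

Vec3 = Tuple[float, float, float]


class JointSmoother:
    """Hypothetical helper that smooths one joint's stream of (x, y, z) samples."""

    def __init__(self, alpha: float = 0.3, max_jump: float = 0.5,
                 max_rejects: int = 5) -> None:
        self.alpha = alpha              # 0..1; lower is smoother but laggier
        self.max_jump = max_jump        # largest per-frame move treated as real (assumed units)
        self.max_rejects = max_rejects  # consecutive "glitch" frames to ignore before giving in
        self.state: Optional[Vec3] = None
        self.rejected = 0

    def update(self, sample: Vec3) -> Vec3:
        # The first sample has nothing to blend with, so accept it as-is.
        if self.state is None:
            self.state = sample
            return self.state

        px, py, pz = self.state
        sx, sy, sz = sample
        dist = ((sx - px) ** 2 + (sy - py) ** 2 + (sz - pz) ** 2) ** 0.5

        # A sudden large jump is treated as a tracking glitch: hold the last
        # position, unless the jump persists long enough to look like real movement.
        if dist > self.max_jump and self.rejected < self.max_rejects:
            self.rejected += 1
            return self.state

        # Otherwise move a fraction (alpha) of the way toward the new sample.
        self.rejected = 0
        a = self.alpha
        self.state = (px + a * (sx - px), py + a * (sy - py), pz + a * (sz - pz))
        return self.state
```

In use, there would be one smoother per tracked body part, and each frame's output would drive the particles in place of the raw coordinates. The trade-off is lag: a lower `alpha` hides more jitter but makes the flowers trail further behind the dancer.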