I have been tasked with writing an engaging and honest blog post about my work as one of the Digital Scholarship and Publishing Studio fellows, but I have a problem: I rarely use the adjective “engaging” to describe my writing. I have, I think, managed to find a creative solution to this particular conundrum. Using something I love, the 1987 adventure movie The Princess Bride, I will review the biggest challenges I have faced during my summer work at the Studio. I hope you enjoy!

**A Note About Links: When I mention a tool or program I use, I have linked a resource from a digital humanities scholar who provides an introduction to or overview of that tool. Happy clicking!**

Method to My Madness: Which Way’s My Way?

My dissertation project examines American Indian civil and legal protest at earthworks and burial sites in the Midwest at the turn of the twentieth century. Pretty cool, right? Though I do not have many digital sources, I have a plethora of textual sources from professional and amateur archaeologists who observed a handful of American Indian people who camped near earthworks and burial sites. Using Google Forms and Excel, I translated correspondence from the papers of Ellison Orr, an amateur archaeologist active in Iowa from 1916 to 1951, and Charles Reuben Keyes, the first director of the Iowa Archaeological Survey (1921-1951). I parsed these letters for geographic data (the To and From addresses) and used categories I created to organize the correspondents. But what to do with this data? What questions could I ask it? Though I am not a digital methods skeptic, I am not an expert on digital methods, and there are moments when I feel genuinely overwhelmed by the possibilities. Social network analysis, textual analysis, and mapping are just a few of the many methods that digital humanities scholars use with data sets like mine! Much like Fezzik, I found myself wondering, “Which way’s my way?”

Collaboration saved my project and my sanity. Nikki White and Rob Shepard, my Studio points of contact, reviewed my data and recommended the kinds of visualizations I could create. Their expertise has been invaluable. Unfortunately, the data I have would not make an analytically valuable social network visualization. But I did have interesting geographic data, and perhaps with some creativity and effort I could create a dynamic and interactive map!

Data Cleaning: Am I Going Mad?

Armed with a mapping method, I moved on to cleaning my data. I used Excel pivot tables to move through my columns, and I opened and closed OpenRefine to clean my data. Even though I did not crowdsource my data, it was still quite messy. Some messiness was human error: capitalization, spelling, spacing, and the like. What drove me to the brink of insanity, though, were the inconsistencies that had nothing to do with spelling or capitalization. I had to make and document choices about the data in my spreadsheets because my questions changed between when I started gathering the data (last year) and now. Column after column and row after row, I made decisions about each cell. My method of cleaning data is not new or revolutionary. Digital humanities scholars have been publishing excellent scholarship on working with and cleaning data for decades. Three excellent pieces from humanities scholars on the challenges of working with data are:

- Big? Smart? Clean? Messy? Data in the Humanities by Christof Schöch

- Lost in Space: Confessions of an Accidental Geographer by Colin Gordon

- Humanities Approaches to Graphical Display by Johanna Drucker

**If you are a digital novice and the term data makes you feel queasy, I would highly recommend Daniel Rosenberg’s Data before the Fact.**
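For readers curious what the "human error" kind of cleanup (capitalization and spacing) can look like in code, here is a minimal sketch in Python. It is not the workflow described above, which relied on Excel and OpenRefine. The function name `normalize_place` and the sample place names are hypothetical, invented only for illustration.

```python
import re
from collections import Counter


def normalize_place(raw: str) -> str:
    """Collapse runs of whitespace and standardize capitalization.

    Note: str.title() is a naive choice. It would turn a name like
    "McGregor" into "Mcgregor", so real data needs a manual review pass.
    """
    collapsed = re.sub(r"\s+", " ", raw).strip()
    return collapsed.title()


# Hypothetical "From" addresses, as they might appear in a messy spreadsheet.
rows = [
    "  iowa   city, iowa",
    "Iowa City, Iowa ",
    "WAUKON, IOWA",
    "Waukon,  Iowa",
    "mount vernon, iowa",
]

cleaned = [normalize_place(r) for r in rows]

# Count how often each cleaned place name appears, roughly what a
# pivot table would show after the variants have been merged.
counts = Counter(cleaned)
for place, n in counts.most_common():
    print(place, n)
```

A script like this only handles the mechanical messiness. The harder decisions, about what a column should mean once the research questions have changed, still have to be made and documented by a person.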

In Lieu of a Conclusion: Where I am Going Next

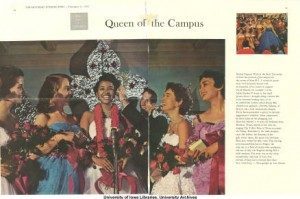

Armed with clean data, I am very excited to work on three visualizations that I hope will help me answer the research question at the heart of my dissertation. For the sake of brevity, I will only describe the first visualization I hope to create. Primary source research has revealed the activism of Emma Big Bear (1869-1968). Emma was a Ho-Chunk woman who resumed residency near the mounds in northeastern Iowa in 1917 and remained there until her death in 1968.

Emma Big Bear returned to northeastern Iowa from the Winnebago Reservation in Nebraska around 1917. She and her husband Henry Holt lived in a wickiup near the earthworks in northeastern Iowa for almost five decades and spoke only Ho-Chunk. During the same years that Emma occupied these sites, amateur archaeologists opened earthworks and hunted for artifacts. I hope to visualize Emma’s campsites alongside a map of the sites that artifact collectors frequently targeted. Artifact collectors described the sites they excavated when they wrote to Ellison Orr (mentioned above). Did Emma and Henry ever cross paths with the most active amateur archaeologists in the region? Did they ever observe the excavations of amateur archaeologists like Ellison Orr, Dale Henning, or Paul Rowe? Using oral histories and amateur archaeologist correspondence, I hope to create an interactive public exhibit in ArcGIS Online based on my data.

Wish me luck!

About Me: My name is Mary Wise and I am a PhD candidate in the history department. My dissertation examines the history of American Indian activism at earthworks and effigy mounds in the Midwest from 1890 to 1950.